Machines can design machines? Yes, they can. This message is reinforced by the latest in reinforcement learning, the publication yesterday of an article in Nature. A team of Google and Stanford researchers describes a reinforcement learning system that has designed the next generation of tensor processing units. The paper itself was written by humans, and many at that: 20 authors, and another 15 people acknowledged for help and support.

What is the big deal? In the field of Electronic Design Automation, companies have been automating the design and testing of chips for decades. These companies have used a variety of optimization techniques, leading up to the current use of reinforcement learning. But the problem of chip design is daunting. Systems require frustrating cycles of parameter modification followed by days-long computer runs. Indeed, the way designers use automated design tools, and the frustration that ensues, has been the subject of research by the author and colleagues.

The chip design problem articulated in the Nature article is abstracted to the question of how to put 1000 items onto a landscape of 1000 resources. The authors point out that the state space is huge, on the order of 1000 factorial (1000!). This makes it far more complex than teaching a computer to learn games such as chess and Go. Moreover, the authors assert that this problem is sufficiently general that its solution can apply to many other design domains. They mention urban planning, as well as vaccine testing and distribution. Both clearly involve the design of artificial structures and processes. Eerily, they also mention cerebral cortex layout. Brains have some of the same qualities as chip layouts: if modules are connected, they tend to be adjacent.
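To get a feel for how large 1000! is, here is a small back-of-the-envelope sketch. The function names are made up for this post and nothing here comes from the paper; it simply computes the size of the number in two ways.

```python
import math


def log10_factorial(n: int) -> float:
    """Return log10(n!) via the log-gamma function, without building n! itself."""
    return math.lgamma(n + 1) / math.log(10)


def digits_in_factorial(n: int) -> int:
    """Count the decimal digits of n! exactly, using Python's big integers."""
    return len(str(math.factorial(n)))


if __name__ == "__main__":
    print(f"log10(1000!) is about {log10_factorial(1000):.1f}")
    print(f"1000! has {digits_in_factorial(1000)} decimal digits")
```

In other words, 1000! is a number with more than 2,500 digits, so enumerating placements one by one is out of the question.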

What is in the algorithm? It uses transfer learning: it can make use of past chip designs to inform new chip designs. That is, just as humans experienced in chip design don’t need to attack every chip as a brand new problem, the algorithm is informed by examples. Transfer learning is complex, in both its human and machine learning forms: it relies on sensing general patterns through experience with many examples. In machine learning, this is called pre-training. In addition, the algorithm makes use of recent advances in graph neural networks. Such networks work explicitly on graph structures. The variant here is called an edge-based graph neural network. The network takes netlists as input, which describe the blocks on a chip, including compute units and memory subsystems, and the connections between them. The whole optimization process is called floorplanning, as the blocks are assigned positions on two-dimensional grids.
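As a rough illustration of the data involved, the sketch below represents a toy netlist as a mapping from nets to the blocks they connect, and a floorplan as a mapping from blocks to grid cells. The block names, the `NETLIST` contents, and the helper functions are all hypothetical; the paper's actual representation is considerably richer.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy netlist: each net lists the blocks it connects.
NETLIST: Dict[str, List[str]] = {
    "net0": ["cpu", "cache"],
    "net1": ["cache", "dram_ctrl"],
    "net2": ["cpu", "cache", "io"],
}

# A placement assigns each block a (row, col) cell on a 2D grid.
Placement = Dict[str, Tuple[int, int]]


def neighbors(netlist: Dict[str, List[str]]) -> Dict[str, Set[str]]:
    """Build the graph view: two blocks are neighbors if they share a net."""
    adj: Dict[str, Set[str]] = {}
    for blocks in netlist.values():
        for b in blocks:
            adj.setdefault(b, set()).update(x for x in blocks if x != b)
    return adj


def is_valid(placement: Placement, netlist: Dict[str, List[str]],
             rows: int, cols: int) -> bool:
    """Check that every block is placed, inside the grid, with no shared cells."""
    all_blocks = {b for blocks in netlist.values() for b in blocks}
    if set(placement) != all_blocks:
        return False
    cells = list(placement.values())
    in_bounds = all(0 <= r < rows and 0 <= c < cols for r, c in cells)
    no_overlap = len(set(cells)) == len(cells)
    return in_bounds and no_overlap


if __name__ == "__main__":
    plan = {"cpu": (0, 0), "cache": (0, 1), "dram_ctrl": (1, 1), "io": (1, 0)}
    print(neighbors(NETLIST)["io"])
    print(is_valid(plan, NETLIST, rows=2, cols=2))
```

The graph view produced by `neighbors` is the kind of structure a graph neural network operates on, while `is_valid` captures the basic constraint that blocks occupy distinct cells of the grid.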

The optimization has multiple objectives: it tries to minimize the total wire length, while at the same time considering congestion, latency, density, power consumption, and area. The algorithm is compared to another algorithm, and to the human team that designed the first-generation tensor processing unit. It produces better results on both counts: the algorithm took 6 hours to produce a better design than a human team that spent months.
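One common way to fold several objectives into a single score is a weighted sum. The sketch below is an assumption-laden toy, not the paper's reward function: it estimates wire length with the half-perimeter of each net's bounding box (a standard proxy in chip placement), adds a crude density penalty for blocks stacked in the same cell, and combines the two with an arbitrary weight `density_weight`.

```python
from collections import Counter
from typing import Dict, List, Tuple

Placement = Dict[str, Tuple[int, int]]
Netlist = Dict[str, List[str]]


def half_perimeter(blocks: List[str], placement: Placement) -> int:
    """Half-perimeter of the bounding box around one net's blocks."""
    rows = [placement[b][0] for b in blocks]
    cols = [placement[b][1] for b in blocks]
    return (max(rows) - min(rows)) + (max(cols) - min(cols))


def wirelength(netlist: Netlist, placement: Placement) -> int:
    """Sum the half-perimeter estimate over all nets."""
    return sum(half_perimeter(blocks, placement) for blocks in netlist.values())


def density_penalty(placement: Placement) -> int:
    """Count blocks beyond the first in each grid cell (a toy density proxy)."""
    return sum(n - 1 for n in Counter(placement.values()).values())


def cost(netlist: Netlist, placement: Placement,
         density_weight: float = 10.0) -> float:
    """Weighted sum of the two proxies; lower is better. The weight is arbitrary."""
    return wirelength(netlist, placement) + density_weight * density_penalty(placement)


if __name__ == "__main__":
    nets = {"n0": ["a", "b"], "n1": ["b", "c"], "n2": ["a", "b", "c"]}
    compact = {"a": (0, 0), "b": (0, 1), "c": (1, 1)}
    spread = {"a": (0, 0), "b": (3, 3), "c": (0, 3)}
    print(cost(nets, compact), cost(nets, spread))
```

Keeping connected blocks close together lowers the score, which matches the intuition that connected modules tend to end up adjacent. A real system would add terms for congestion, timing, and power, and the trade-off between them is a design choice in its own right.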

Where does this go? One aspect of this system not discussed is the human effort involved in building the software. There must have been a considerable effort. Can that effort itself be done by machine? At Google and other research labs, there are efforts to develop automated machine learning: systems that design algorithms. Their first applications have been around finding appropriate neural architectures to solve particular problems. One can imagine reframing the problem of creating the next graph placement method into a form that the computer can try to solve. The design of chips and the design of algorithms to design chips are both combinatorial optimization problems that involve steering through vast state spaces.

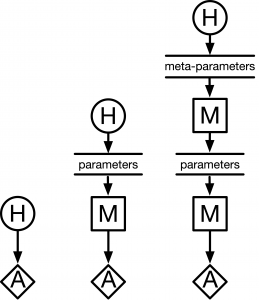

The reinforcement learning paradigm works if there are fast ways of generating and scoring examples. So one can expect attempts to continue to move the human up the meta stack, as in the figure: humans (H) design machines (M) that design artifacts (A) – unless or until the machines are stumped. Indeed, in the current issue, a Nature editorial expressed concern about the possible reduction of highly skilled human jobs as a result of the algorithm. For the time being, what we are more likely to see are skill shifts: the skills of designing chips become the skills of designing systems that design chips, and so on upwards.